Jasmeen Kaur

Jr. Associate, Montreal

Data

The financial services industry is well known to be one of the sectors most targeted by cyber-attacks: 71% of all data breaches are financially motivated. As the frequency and scale of cyber-attacks have increased year over year, records show that the average total cost of a data breach worldwide in 2024 was $6.08 million per incident.

In AI-driven data ecosystems, businesses often opt for “live experimentation” to drive growth and innovation. In the wake of massive data exfiltration attacks, however, this experimentation can carry significant financial and reputational risk. To balance innovation against the inevitability of cyber incidents, successful approaches to AI security will marry the exploration of new markets, the testing of novel ideas, and swift adaptation to changing customer needs with resilience against cyber-attacks and exposures.

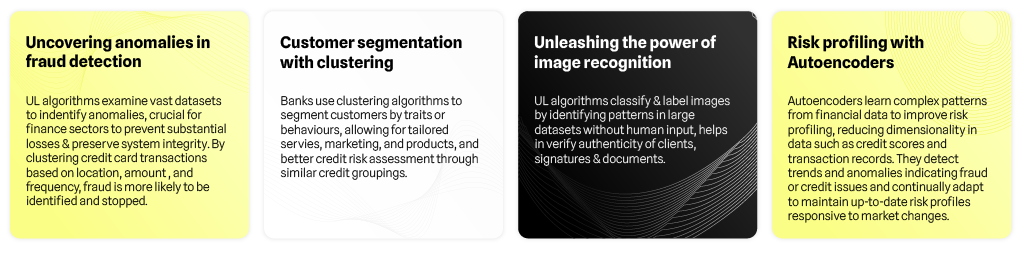

Unsupervised learning (UL) AI models are particularly vulnerable to cyber threats because their security has received less attention than that of supervised and reinforcement learning models, which poses risks to financial integrity and user privacy. The quality of the data powering AI is often underestimated, yet it is crucial for model performance and ethical standards. There is an urgent need to enhance AI security through a defense-in-depth strategy that integrates multiple layers of security throughout the operational lifecycle and the software development lifecycle (SDLC). This need is especially acute for UL, where current defenses fall short against sophisticated adversarial attacks, highlighting the necessity for robust, secure AI development.

Unsupervised AI models use a type of machine learning (ML) that learns from the data itself, without human supervision. In these models, unlabeled data reveals hidden patterns or data groupings. Some of the AI models using UL are as follows:

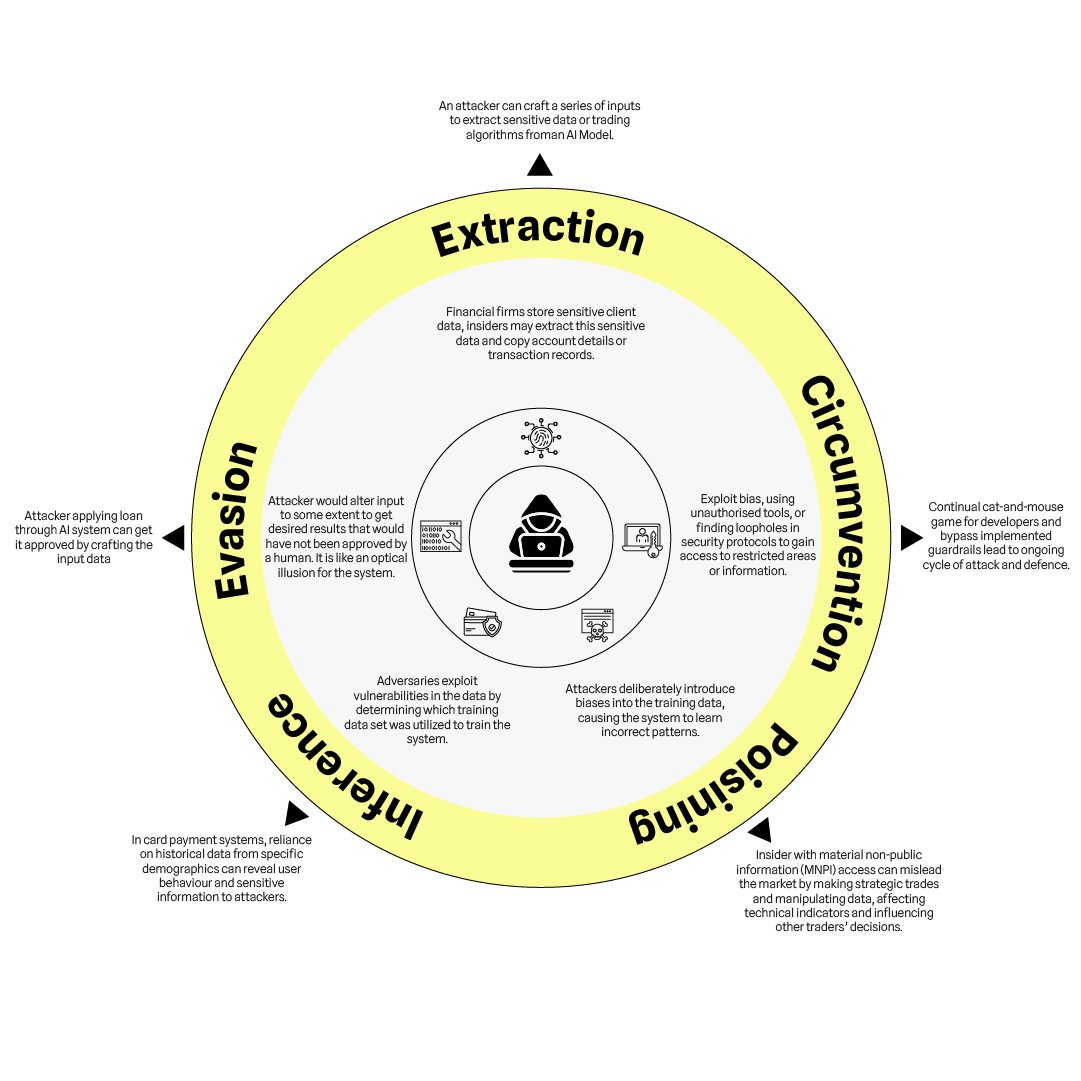

Adversarial attack: An attacker disrupts a machine learning model's classification ability by injecting faulty input, harming the business applications that depend on it. For example, an attacker who mimics a maintenance worker's routine, which a security camera's ML model recognizes as normal, could gain unauthorized access without triggering alarms, exploiting the model's tendency to overlook such threats. Examples of adversarial attacks are mentioned below:

Key defensive methods are essential to prevent vulnerabilities from being exploited, but these methods tend to rely more on positive testing than on negative testing. If vulnerabilities, flaws, and weaknesses are found and fixed through negative test cases before they affect end users, and this is combined with regular maintenance, malicious actors can be thwarted. The following are the main defensive methods for guarding against exploitation.

Adversarial training: The model is intentionally fed adversarial examples, faulty inputs crafted to make machine learning models fail, so that it learns to classify these known harmful inputs as threats. This mirrors how a machine learning model learns to classify data during regular training, with the added effect that it learns to reject such perturbations. Continuous maintenance and supervision are of utmost importance for this approach, since attempts to manipulate machine learning models keep evolving.
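The idea can be illustrated with a minimal sketch. It assumes a toy supervised logistic-regression classifier on synthetic data and uses the Fast Gradient Sign Method (FGSM) to craft the adversarial examples; neither choice comes from the article, and the function names, `eps` value, and data are hypothetical. At each epoch the model is trained on the clean inputs plus perturbed copies of them.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def fgsm(X, y, w, b, eps):
    """FGSM: move each input by eps in the direction that increases its loss."""
    grad_x = (sigmoid(X @ w + b) - y)[:, None] * w  # d(log-loss)/d(input)
    return X + eps * np.sign(grad_x)

def train(X, y, eps=0.0, epochs=200, lr=0.1):
    """Logistic regression; if eps > 0, also train on adversarial copies."""
    w, b = np.zeros(X.shape[1]), 0.0
    for _ in range(epochs):
        if eps > 0:
            # Craft adversarial examples against the current model and
            # add them to the batch with their correct labels.
            X_batch = np.vstack([X, fgsm(X, y, w, b, eps)])
            y_batch = np.concatenate([y, y])
        else:
            X_batch, y_batch = X, y
        err = sigmoid(X_batch @ w + b) - y_batch
        w -= lr * X_batch.T @ err / len(y_batch)
        b -= lr * err.mean()
    return w, b

def accuracy(X, y, w, b):
    return float(((sigmoid(X @ w + b) > 0.5) == y).mean())

# Synthetic two-class data (assumption: two Gaussian blobs).
rng = np.random.default_rng(0)
X = np.vstack([rng.normal(-1.0, 0.7, (100, 2)), rng.normal(1.0, 0.7, (100, 2))])
y = np.concatenate([np.zeros(100), np.ones(100)])

for name, eps_train in [("standard", 0.0), ("adversarial", 0.3)]:
    w, b = train(X, y, eps=eps_train)
    X_attack = fgsm(X, y, w, b, eps=0.3)
    print(name, "clean:", accuracy(X, y, w, b),
          "under attack:", accuracy(X_attack, y, w, b))
```

Because attack methods keep changing, a loop like this would need to be rerun with newly observed adversarial inputs rather than treated as a one-time hardening step.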

Defensive distillation: This is done by training a teacher network and a student network. Using standard training protocols, the teacher neural network is first trained on the original dataset. After training, the teacher's class probabilities, which are more informative than hard labels, are used as soft targets for the next stage. These soft targets are then used to train a student network, which may or may not have the same architecture as the teacher network. By imitating the teacher's output distribution, which includes the confidence levels for every class, the student network becomes better at generalizing.
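The teacher and student stages can be sketched as follows. This is a simplified illustration, assuming linear softmax classifiers in place of neural networks, synthetic three-class data, and a softmax temperature `T` used to soften the teacher's probabilities; the temperature, the data, and all names are assumptions rather than details from the article.

```python
import numpy as np

def softmax(logits, T=1.0):
    """Softmax at temperature T; a higher T gives a flatter distribution."""
    z = logits / T
    z = z - z.max(axis=1, keepdims=True)  # subtract row max for stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def train_softmax(X, targets, T=1.0, epochs=300, lr=0.5):
    """Fit a linear softmax classifier to hard or soft target distributions."""
    W = np.zeros((X.shape[1], targets.shape[1]))
    b = np.zeros(targets.shape[1])
    for _ in range(epochs):
        probs = softmax(X @ W + b, T)
        # Gradient of the mean cross-entropy with respect to the logits.
        grad = (probs - targets) / (T * len(X))
        W -= lr * X.T @ grad
        b -= lr * grad.sum(axis=0)
    return W, b

# Synthetic three-class data (assumption: Gaussian blobs).
rng = np.random.default_rng(0)
centers = np.array([[-2.0, 0.0], [2.0, 0.0], [0.0, 2.5]])
X = np.vstack([rng.normal(c, 0.8, (60, 2)) for c in centers])
labels = np.repeat(np.arange(3), 60)
hard = np.eye(3)[labels]  # one-hot hard labels

T = 5.0
W_t, b_t = train_softmax(X, hard, T)      # 1. train the teacher on hard labels
soft = softmax(X @ W_t + b_t, T)          # 2. teacher class probabilities
W_s, b_s = train_softmax(X, soft, T)      # 3. train the student on soft targets
student_pred = (X @ W_s + b_s).argmax(axis=1)  # 4. student predictions

print("student accuracy:", float((student_pred == labels).mean()))
```

The soft targets carry the teacher's relative confidence across all classes, which is the extra information the student learns from compared with one-hot labels.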

Real-time monitoring: Real-time monitoring of prompts and responses can help promptly identify non-compliant responses, much as public surveillance cameras are used for crime control and prevention. This can be done with automated systems that flag potentially hazardous or non-compliant content.
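As a rough sketch of such an automated check, the wrapper below screens the prompt before the model is called and the response before it is returned, recording each decision in an audit log. The rule names, regular expressions, and the `monitored_call` helper are hypothetical and purely illustrative; a production system would rely on policies defined by compliance teams and far more robust detection.

```python
import re
from dataclasses import dataclass, field

# Hypothetical rules for illustration only.
RULES = {
    "card_number": re.compile(r"\b\d{4}[ -]?\d{4}[ -]?\d{4}[ -]?\d{4}\b"),
    "prompt_injection": re.compile(
        r"ignore (?:all |any )?(?:previous |prior )?instructions", re.IGNORECASE
    ),
}

@dataclass
class Verdict:
    allowed: bool
    violations: list = field(default_factory=list)

def check(text):
    """Return which rules, if any, the text violates."""
    hits = [name for name, pattern in RULES.items() if pattern.search(text)]
    return Verdict(allowed=not hits, violations=hits)

def monitored_call(model, prompt, audit_log):
    """Screen the prompt, call the model, then screen the response."""
    verdict, stage, response = check(prompt), "prompt", None
    if verdict.allowed:
        response = model(prompt)  # model is any callable: prompt -> text
        verdict, stage = check(response), "response"
    audit_log.append(
        {"stage": stage, "allowed": verdict.allowed, "violations": verdict.violations}
    )
    return response if verdict.allowed else "[blocked by monitor]"

# Usage with a stand-in model that leaks a card number.
log = []
leaky_model = lambda prompt: "Your card 4111 1111 1111 1111 is active."
print(monitored_call(leaky_model, "What is my card status?", log))
print(log[-1])
```

Keeping the audit log separate from the blocking decision lets the same records feed later review and alerting.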

Proactive monitoring of circumvention techniques: An important aspect of surveillance is understanding how network censorship and restrictions are evaded. To gain access to restricted websites or services, users can switch DNS providers, use VPNs, or set up proxy servers for messaging applications. Watching for these same techniques can help keep an eye on the behavior of possible abusers.

Attribute-based access control (ABAC): ABAC can help design policies that limit access to contextual datasets and categories of data that require heightened sensitivity and stronger enforcement through layered entitlement defenses. For example, customer records may require specific segmentation for different data elements within the record and for different consumption patterns, based on the user's role, location, affiliated organization, time of day, or any other relevant controls.
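A minimal sketch of field-level ABAC on a customer record might look like the following. The policy table, attribute values, organization name, and helper functions are all hypothetical and chosen only to mirror the attributes listed above (role, location, affiliated organization, time of day); fields without a policy entry are denied by default.

```python
from dataclasses import dataclass
from datetime import time

@dataclass(frozen=True)
class Subject:
    role: str
    location: str
    organization: str

# Hypothetical policy: each data element lists the attributes allowed to read it.
POLICY = {
    "name": {
        "roles": {"analyst", "advisor"},
        "locations": {"CA", "US"},
        "hours": (time(0, 0), time(23, 59)),
    },
    "account_number": {
        "roles": {"advisor"},
        "locations": {"CA"},
        "hours": (time(8, 0), time(18, 0)),
    },
}

def can_read(subject, field_name, at, organization="ExampleBank"):
    """Allow access only if every attribute satisfies the field's rule."""
    rule = POLICY.get(field_name)
    if rule is None:
        return False  # deny by default when no rule exists
    start, end = rule["hours"]
    return (
        subject.organization == organization
        and subject.role in rule["roles"]
        and subject.location in rule["locations"]
        and start <= at <= end
    )

def redact(record, subject, at):
    """Return a copy of the record with unreadable fields masked."""
    return {k: (v if can_read(subject, k, at) else "***") for k, v in record.items()}

record = {"name": "A. Customer", "account_number": "12345", "tax_id": "000"}
advisor = Subject("advisor", "CA", "ExampleBank")
analyst = Subject("analyst", "US", "ExampleBank")

print(redact(record, advisor, time(10, 0)))  # advisor during business hours
print(redact(record, analyst, time(10, 0)))  # analyst sees fewer fields
print(redact(record, advisor, time(22, 0)))  # same advisor, outside hours
```

Evaluating the policy per data element, rather than per record, is what allows different consumers to see different segments of the same customer record.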

Implementing reliable AI principles, validating models, and using data poisoning detection technologies are also recommended.

An attack framework for AI systems called the “Adversarial Threat Landscape for Artificial-Intelligence Systems” (ATLAS) was developed by MITRE in collaboration with Microsoft and 11 other businesses. It describes 12 stages (tactics) of attacks on machine learning systems.

Counterfit, announced by Microsoft, is an open-source automation tool for testing the security of AI systems. It can be used in red team operations, and also during the AI development process to identify vulnerabilities before systems are released into production.

Additionally, the Adversarial Robustness Toolbox (ART), an open-source defensive tool for adversarial robustness originally developed by IBM, is currently managed as a Linux Foundation project. The project includes 39 attack modules divided into four main categories: evasion, poisoning, extraction, and inference. It also supports all popular ML frameworks.

In conclusion, as AI starts to influence many aspects of our lives, the security of unsupervised learning models becomes crucial. Although current defensive techniques such as defensive distillation and adversarial training provide some protection, they have gaps, drawbacks, and trade-offs that call for more research and development. New tools and technologies have the potential to improve AI security, but attackers and defenders remain locked in an arms race. The industry must place a high priority on creating reliable, secure AI systems to protect against changing threats and to ensure the security and dependability of AI applications.

At Synechron, we specialize in providing tailored solutions to enhance your data pipeline and make use of new AI capabilities. Leveraging our extensive experience in technology consulting within the financial services sector, we have cultivated an in-depth understanding of this industry’s nuances. Our proficiency in implementing advanced analytics solutions, coupled with our industry expertise, positions us uniquely to help you make the most of your data. We are committed to propelling your business to unprecedented heights of success.

If you are interested in learning more about hyper-personalization or Synechron’s data & analytics services, please reach out to our local leadership team.
