Prabhakar Srinivasan

Director of Technology, Innovation and Co-Lead of the AI Practice, Synechron

Sujith Vemishetty

Lead, Data Science, Bangalore Innovation, Synechron

Artificial Intelligence

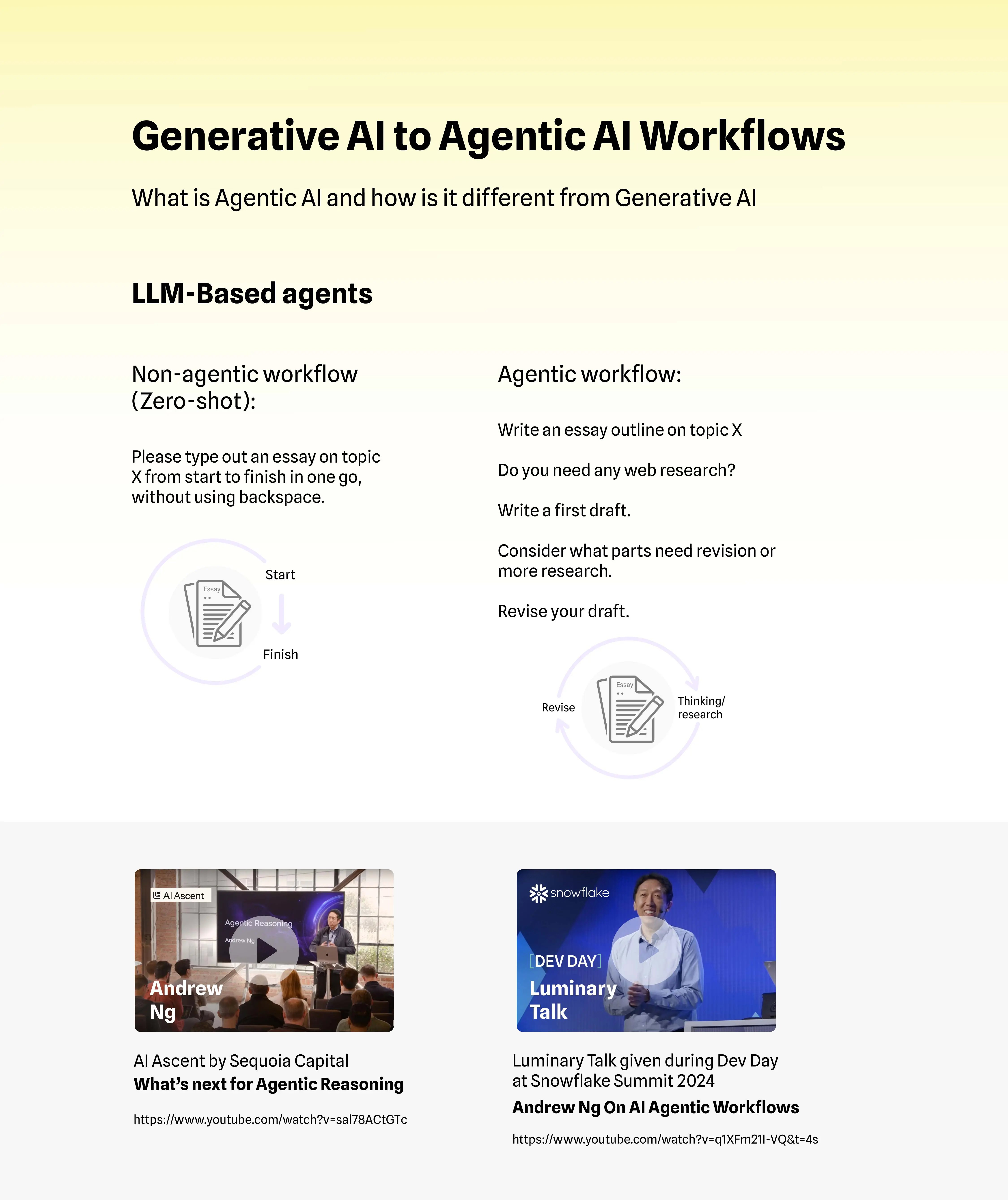

Generative AI (GenAI) has traditionally been about a highly capable generative model responding to a simple user prompt or question. These GenAI models, despite their power, are limited, and have not been able to perform tasks autonomously.

Traditionally, Large Language Models (LLMs) have only been able to generate content through next-word prediction and one-shot generation, with no mechanism to iterate on or review their own output. This method of using LLMs is sometimes called “frozen” model-based generation.

Previously, a mechanism called ‘function calling’ allowed developers to describe the functions and actions that these models could invoke, such as performing a calculation or placing an order. Agentic AI frameworks can now leverage this function-calling capability, allowing LLMs to handle complex workflows with much more autonomy and to generate better-quality output.
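To make function calling concrete, here is a minimal sketch in Python. It is an illustration under stated assumptions, not any vendor's API: `fake_model` is a hypothetical stand-in for an LLM that answers with a structured JSON call, and `add`, `place_order` and `run_function_call` are invented names for this example.

```python
import json
from typing import Any, Callable, Dict


# Hypothetical tools that a developer exposes to the model.
def add(a: float, b: float) -> float:
    return a + b


def place_order(item: str, quantity: int) -> str:
    return f"ordered {quantity} x {item}"


# Registry mapping tool names to the Python callables behind them.
TOOLS: Dict[str, Callable[..., Any]] = {"add": add, "place_order": place_order}


def fake_model(prompt: str) -> str:
    """Stand-in for an LLM (assumption): returns a JSON function call, not free text."""
    if "order" in prompt.lower():
        call = {"name": "place_order", "arguments": {"item": "laptop", "quantity": 2}}
    else:
        call = {"name": "add", "arguments": {"a": 2, "b": 3}}
    return json.dumps(call)


def run_function_call(prompt: str) -> Any:
    """Parse the model's structured call and dispatch it to the matching tool."""
    call = json.loads(fake_model(prompt))
    tool = TOOLS.get(call["name"])
    if tool is None:
        raise ValueError(f"unknown tool: {call['name']}")
    return tool(**call["arguments"])
```

The point of the design is that the model only chooses a function name and arguments; the application decides whether and how the function actually runs.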

The above illustration indicates this shift in focus from GenAI-powered ‘co-pilots’ to more autonomous Agentic AI. Complex enterprise problems that don’t fit into linear workflows and require iterations based on introspection, evaluation and feedback can now be solved more efficiently, with notable examples including app modernization, HR workflow management and virtual assistants.
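The iteration described here (produce a draft, evaluate it, feed the critique back in) can be sketched as a small loop. This is a hedged illustration only: `refine`, `stub_generate` and `stub_evaluate` are hypothetical names, and in a real system both stubs would be replaced by LLM calls.

```python
from typing import Callable, Tuple

Generate = Callable[[str, str], str]          # (task, feedback) -> draft
Evaluate = Callable[[str], Tuple[bool, str]]  # draft -> (accepted, feedback)


def refine(task: str, generate: Generate, evaluate: Evaluate,
           max_rounds: int = 3) -> Tuple[str, int]:
    """Generate a draft, then evaluate and revise it for up to max_rounds rounds."""
    draft = generate(task, "")
    for round_no in range(1, max_rounds + 1):
        accepted, feedback = evaluate(draft)
        if accepted:
            return draft, round_no
        draft = generate(task, feedback)  # revise using the critique
    # Round budget exhausted: return the latest revision without re-evaluating it.
    return draft, max_rounds


def stub_generate(task: str, feedback: str) -> str:
    """Stand-in for an LLM writer (assumption): only adds a test plan when asked."""
    extra = " with test plan" if "test plan" in feedback else ""
    return f"{task}: summary{extra}"


def stub_evaluate(draft: str) -> Tuple[bool, str]:
    """Stand-in for an LLM reviewer (assumption): insists on a test plan."""
    if "test plan" in draft:
        return True, ""
    return False, "add a test plan"
```

With these stubs, `refine("modernize billing app", stub_generate, stub_evaluate)` rejects the first draft and accepts the revised one in the second round.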

Agentic AI can be applied across multiple domains to simplify workloads. For example, in the HR domain, an Agentic AI can be deployed to handle common administrative tasks like recruitment and selection, employee onboarding, employee relations, performance management, training and development, compensation and benefits, HR policy and compliance. This frees up time for human HR professionals to focus on the more complicated tasks that require human input.

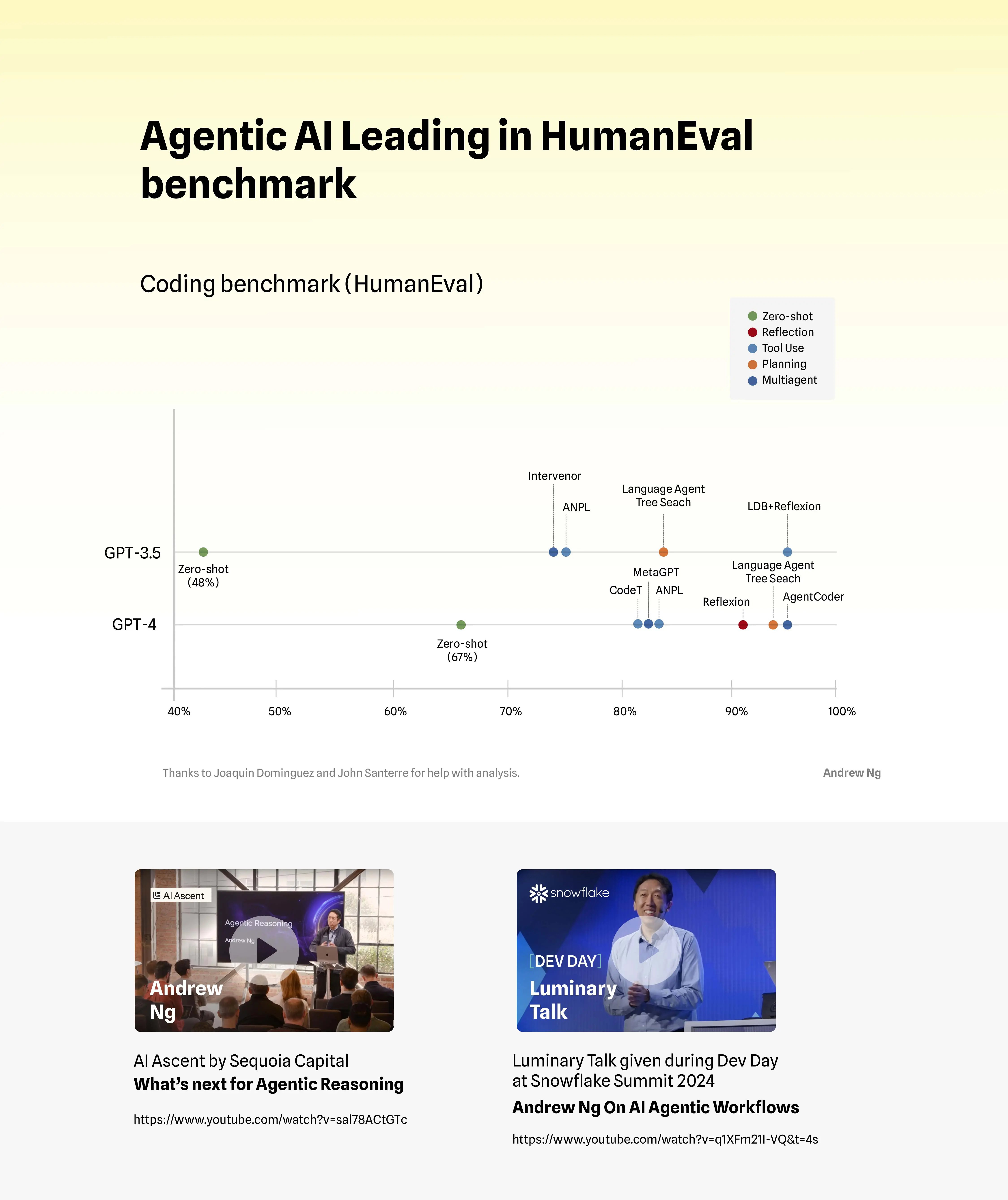

A mixture of agents has already outperformed GPT-4 used on its own at coding tasks (illustrated below).

A great example of AI agents in action is ‘Devin AI’, which, according to its creators at Cognition AI, is “the world’s first fully autonomous AI software engineer.” Devin AI has been able to implement a software project fully autonomously, end-to-end, from writing an implementation plan to creating a website. This trend of moving away from simple chatbots that deal with simple questions or tasks, towards feature-rich agents that can complete complex tasks autonomously, is genuinely a new frontier, and Devin AI, with its remarkable capabilities, has given us a peek at the art of the possible.

Advancements in reinforcement learning (RL), a branch of AI that focuses on creating self-teaching autonomous systems that learn through trial and error, have made it possible to deploy AI agents in real-world applications. Autonomous cars, industrial robots, and gaming are just a few examples of recent applications. Additionally, the advent of LLMs such as ChatGPT has significantly increased the capacity of AI agents within a very short period, thanks to their superior ability to understand and generate human-like text or speech and to draw on a large knowledge base.
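Learning through trial and error can be shown with one of the simplest RL settings, a multi-armed bandit solved with an epsilon-greedy strategy. The sketch below is illustrative only: the reward means, the noise level and the function name `epsilon_greedy_bandit` are assumptions made for this example, not taken from any system mentioned in the article.

```python
import random
from typing import List, Tuple


def epsilon_greedy_bandit(true_means: List[float], steps: int = 1000,
                          epsilon: float = 0.1, seed: int = 0) -> Tuple[int, List[float]]:
    """Learn which action pays best purely from noisy trial-and-error feedback."""
    rng = random.Random(seed)
    n_arms = len(true_means)
    estimates = [0.0] * n_arms  # running average reward per action
    pulls = [0] * n_arms        # how often each action has been tried
    for _ in range(steps):
        if rng.random() < epsilon:
            arm = rng.randrange(n_arms)                         # explore at random
        else:
            arm = max(range(n_arms), key=lambda i: estimates[i])  # exploit best guess
        reward = rng.gauss(true_means[arm], 0.05)               # noisy feedback (assumed)
        pulls[arm] += 1
        estimates[arm] += (reward - estimates[arm]) / pulls[arm]  # incremental mean
    best = max(range(n_arms), key=lambda i: estimates[i])
    return best, estimates
```

The agent is never told which action is best; it discovers this by occasionally trying alternatives and updating its estimates from the rewards it observes.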

Combined progress in these domains has made AI agents the dominant area in recent AI research and development, with the introduction of frameworks like LangChain further easing the implementation of these AI agents. LangChain provides various tools that simplify building LLM-driven applications, supercharging the adoption of AI agent technology.

Further driving this progress has been the introduction of frameworks like LangGraph, AutoGen, CrewAI, and GoEX, which can create full-blown, robust Agentic AI workflows with multiple agents. These frameworks include features like Memory, Agent-to-Agent Communication, Human-in-the-loop, Caching, Agent Observability, and Task Decomposition. These sophisticated constructs are necessary for building and scaling enterprise-ready Agentic AI applications.
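A few of these constructs (task decomposition, agent-to-agent messages, per-agent memory and a human-in-the-loop gate) can be sketched in plain Python. This does not use or reproduce the API of LangGraph, AutoGen, CrewAI or GoEX; the `Agent` class, `run_workflow` and the lambda "skills" are hypothetical stand-ins for LLM-backed agents.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Agent:
    name: str
    handle: Callable[[str], str]  # stand-in for an LLM-backed skill (assumption)
    memory: List[str] = field(default_factory=list)

    def run(self, message: str) -> str:
        reply = self.handle(message)
        self.memory.append(f"{message} -> {reply}")  # per-agent memory
        return reply


def run_workflow(task: str, planner: Agent, workers: Dict[str, Agent],
                 approve: Callable[[str], bool]) -> List[str]:
    """Decompose a task, route each subtask to a worker, keep approved results."""
    approved: List[str] = []
    plan = planner.run(task)  # task decomposition, formatted as "role: detail; ..."
    for subtask in plan.split(";"):
        role, _, detail = subtask.partition(":")
        result = workers[role.strip()].run(detail.strip())  # agent-to-agent message
        if approve(result):  # human-in-the-loop gate
            approved.append(result)
    return approved


# Hypothetical HR-style example wiring.
planner = Agent("planner", lambda task: "writer: draft offer letter; checker: review offer letter")
workers = {
    "writer": Agent("writer", lambda m: f"[writer] done: {m}"),
    "checker": Agent("checker", lambda m: f"[checker] done: {m}"),
}
results = run_workflow("onboard new hire", planner, workers, approve=lambda r: True)
```

Here `approve` always returns `True`; in practice it is where a human reviewer, or a policy check, would accept or reject each agent's output before it moves on.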

There are four popular design patterns in Agentic AI workflows:

Andrew Ng, founder of DeepLearning.AI, recently said that agent-based workflows can even be a path to Artificial General Intelligence (AGI), the Holy Grail of AI research. He believes that AI agent workflows, collaborating and iteratively working on a given problem, will drive massive progress in AI this year – probably more than the next generation of foundation models like the successors to GPT-4. An example of applied AGI could be a personal assistant using human-like cognitive abilities to understand and respond to a wide range of tasks and questions using natural language.

Another AI pioneer, Turing Award winner Yann LeCun, also sees AI agents as a potential route to more intelligent machines. Meta AI, where LeCun is the chief AI scientist, launched ‘CICERO’ in 2022 as the first AI agent to operate at a human level in ‘Diplomacy’, a strategy game that requires significant negotiation skills. LeCun, while betting heavily on AI agents, doubts that LLMs are the most promising path towards AGI, due to what he calls “the limits of language”.

Setting aside whether AGI might be achievable through AI agents, the transformative capabilities that agents like CICERO and Devin have demonstrated in recent years indicate both that they can be deployed in multiple domains to reduce workloads and simplify processes, and that they’re here to stay.